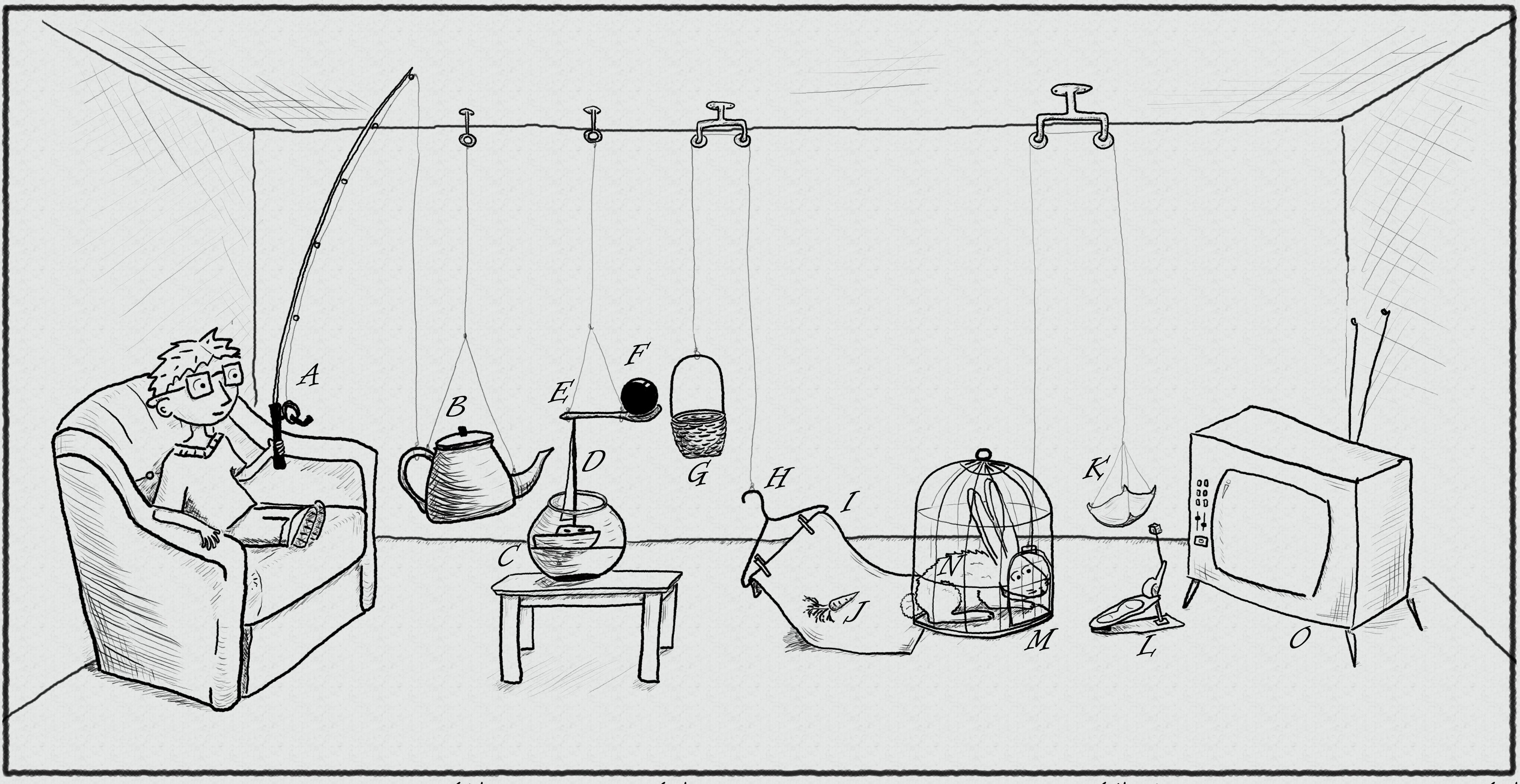

Many organizations collaborate by moving data and information between people, files or applications. While movement may provide analysts with the illusion of adding value, data movement is not the same thing as data sharing. While the latter is a good thing to be nurtured, the former causes version control problems, wasted time, and analyst frustration.

In our original post on why data analysts are so inefficient, we covered the six core causes of data waste. In today's post we'll tackle the first cause: conveyance. Below are five ways to reduce data movement and put the focus back on sharing.

1. Stop emailing (IMing, Skyping) files back and forth. Email and chat programs are some of the most popular ways to move data around. While we are all guilty of doing this every now and then, it creates huge access and version control problems for your team. Stop doing it. The most effective alternative is to utilize your company's sharing environment to store and update files. Don't have a sharing environment? Go to #4.

2. Eliminate "staging" files or sheets. A good general rule is: do not put data into a spreadsheet, database or file that does not add specific value. Many analysts use staging files to restructure or reformat data before it is used by someone or something else. Eliminating these files not only reduces waste and confusion, but also makes process documentation faster to assemble.

3. Be careful with "movement automation". This category can include FTP syncs, VBA macros or any other kind of automated process that pulls data from one location into another. FTP syncs often create timing bottlenecks (to be discussed in a future post) or create confusion among users. VBA macro code in Excel can cause chaos when there are structural changes in either the source or destination spreadsheets. In general, any kind of data automation must be handled with a high level of care and used only when the manual effort it saves outweighs the risk it introduces.

4. Create a sharing environment. There are many products for sharing data across organizations. Some teams upload files to shared drives or use SharePoint or Dropbox, while others build custom SQL databases to store raw data. Some use Shooju. Whatever option you choose, ensuring that it is a complete solution is critical to creating any form of internal data harmony. Because of the confusion they can cause without full adoption, partial solutions can be worse than no solution at all.

5. Google it. Some organizations have no love lost for the cloud, while others are rapidly making the transition. Wherever your company is positioned, Google Drive can be an excellent solution to specific sharing problems. While seasoned data analysts may scoff at this idea, there are great ways to track changes and store raw data sets that streamline shared data flow.
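For teams that do keep some movement automation (tip #3), one way to limit the damage from structural changes is to check the source layout before anything gets copied. The sketch below is only an illustration, not a prescribed method: the column names, the `SchemaMismatch` exception and the `read_validated_rows` function are all hypothetical, and it assumes the source is a CSV export with a header row.

```python
import csv
import io

# Hypothetical layout that the destination sheet or table expects.
EXPECTED_COLUMNS = ["date", "region", "sales"]


class SchemaMismatch(Exception):
    """Raised when the source layout differs from what the automation expects."""


def read_validated_rows(source, expected=EXPECTED_COLUMNS):
    """Return all rows as dicts, but only if the header matches `expected` exactly."""
    reader = csv.DictReader(source)
    actual = reader.fieldnames or []  # None for an empty file
    if actual != list(expected):
        missing = [c for c in expected if c not in actual]
        unexpected = [c for c in actual if c not in expected]
        raise SchemaMismatch(
            f"source layout changed: missing={missing}, unexpected={unexpected}"
        )
    return list(reader)


# Illustrative data only: one export with the expected layout, one without.
good_export = io.StringIO("date,region,sales\n2024-01-01,EMEA,100\n")
changed_export = io.StringIO("date,sales\n2024-01-01,100\n")

print(read_validated_rows(good_export))

try:
    read_validated_rows(changed_export)
except SchemaMismatch as err:
    # Stop here instead of silently writing misaligned data downstream.
    print("Transfer skipped:", err)
```

The design choice is to fail loudly: a skipped transfer that someone has to look at is usually cheaper than a destination file quietly filled with shifted columns.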

A word about sharing across applications

The suggestions in this post are most effective when moving data between individuals using the same application, for example moving an Excel file to another Excel user. Sharing data across applications is much more difficult. In many organizations, analysts who use more advanced analytical tools like SAS often have to export results into Excel spreadsheets (creating a "staging problem") and email them (gasp!) to colleagues who are non-SAS users. Additionally, keeping these staging files updated can be a big pain.

Readers: what tips do you have to solve data sharing across applications? Leave them in the comments below.