Image by Falkor, Krypt3ia

In an attempt to produce better products, explore new markets and defeat competition, companies spend huge amounts of time and money to create data processes that empower researchers, analysts and data scientists. While these processes take many different forms, most companies still aren't using data efficiently, and much of the time and money they spend on data is wasted.

1. CONVEYANCE

Analysts and IT professionals waste time moving data back and forth between models, users and physical locations. Some examples of this include moving databases between users, linking spreadsheets, copying and pasting data from one location to another or syncing FTP sites to access data in multiple countries.

While many organizations try to address these needs by placing spreadsheets on mirrored shared drives or creating SQL databases, this often makes the data conveyance process both inflexible and unstable. One of our customers originally set up a series of five interconnected spreadsheet models that had a total process failure after one analyst moved his output data over just one column.
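The failure described above, where shifting output by one column broke every downstream model, is typical of consumers that read data by position. As a minimal sketch (the column names and sample data are hypothetical, not the customer's actual models), a downstream step can look columns up by header name so that an inserted or moved column does not silently change what it reads:

```python
import csv
import io

def read_column(csv_text, column_name):
    """Return the values of one column, looked up by header name rather than position."""
    reader = csv.DictReader(io.StringIO(csv_text))
    if reader.fieldnames is None or column_name not in reader.fieldnames:
        # Fail loudly instead of reading the wrong column.
        raise KeyError(f"column {column_name!r} not found; got {reader.fieldnames}")
    return [row[column_name] for row in reader]

# Hypothetical output of an upstream model, before and after an analyst
# inserts a column and pushes "forecast" one position to the right.
original = "region,forecast\nnorth,120\nsouth,95\n"
shifted = "region,notes,forecast\nnorth,,120\nsouth,,95\n"

# Both layouts yield the same values because the lookup is by name.
assert read_column(original, "forecast") == read_column(shifted, "forecast")
```

If the header itself is renamed or removed, the function raises an error instead of passing bad numbers to the next model, which turns a silent failure into a visible one.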

Here is a blog post with recommendations on how to reduce conveyance waste.

2. INVENTORY

Collecting and holding unused data in inventory costs money. While the direct cost of storing data continues to fall, the human investment in updating and maintaining unused data and the opportunity cost of not using that data both continue to rise. Storing unused or unusable data can also muddle the entire data ecosystem, making it harder to find the data you actually need.

The magnitude of inventory costs can vary, with examples ranging from the cost of preserving large, legacy databases all the way down to updating a small data set with superfluous data points. And while groups of all sizes face this issue, this problem is much more pronounced and costly within large organizations. One large ($10 billion +) energy company we talked to was spending millions a year to maintain and collect unused data whose value had not been determined.

Here is a blog post with recommendations on how to reduce inventory waste.

3. WAITING

"Waiting" falls into two core categories: human and technological. On the human side, organizations with "data bureaucracies" can inadvertently create work process bottlenecks that waste enormous amounts of time and frustrate data users. Many of our customers have historically assigned analysts (or in some cases interns) to manage specific data sets, only to find that when that person is busy, on vacation or has left the company, the data is not updated.

When it comes to technology, many organizations have updating or ETL processes that refresh too infrequently. Because of the clunkiness of this type of setup, data users can be forced to wait for the data to update, which might not happen for another several hours or even days.
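One way to make refresh delays visible, rather than leaving users to discover them, is to record when each data set was last refreshed and flag it once it exceeds an agreed maximum age. The sketch below is illustrative only: the function name, the 24-hour threshold and the timestamps are assumptions, not part of any particular ETL tool.

```python
from datetime import datetime, timedelta, timezone

def is_stale(last_refreshed, max_age, now=None):
    """Return True when the data set is older than the allowed age."""
    now = now or datetime.now(timezone.utc)
    return now - last_refreshed > max_age

# Hypothetical data set that should be refreshed at least once a day.
now = datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc)
last_refreshed = datetime(2024, 1, 1, 6, 0, tzinfo=timezone.utc)

print(is_stale(last_refreshed, timedelta(hours=24), now=now))  # 30 hours old -> True
```

A check like this can run before any report is built, so a stale input prompts a refresh request instead of an unexplained wait.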

Here is a blog post with recommendations on how to reduce waiting time.

4. OVER-PROCESSING

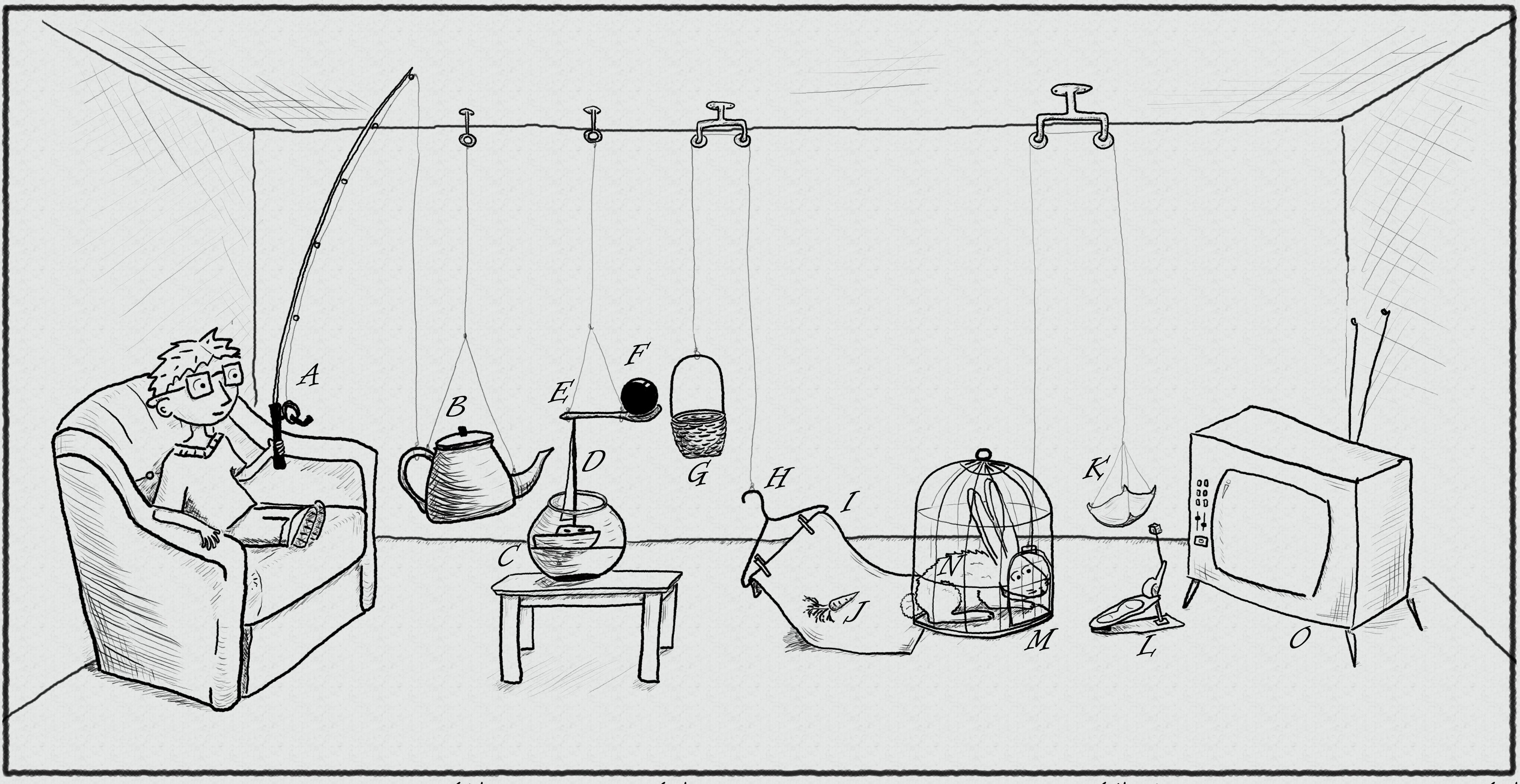

In the spirit of Rube Goldberg, data often go through too many processing steps on the way to being useful. While these steps are intended to save time, increase consistency or standardize inputs or outputs, many companies go too far.

We have seen countless companies create structures that move data from one spreadsheet or database to another (conveyance) without adding any value. These steps can drive huge inefficiencies by inflating storage requirements and documentation time and by demanding an unnecessary amount of human intervention.

Here is a blog post with recommendations on how to reduce over-processing.

5. CORRECTION (ERRORS)

Speaking of over-processing, the more complex the data process, the more error-prone the results become. While this is true of any process, the complexity of data systems combined with tight project deadlines can create the ideal conditions for a mistake.

Even though these mistakes are both common and costly, quality checks are too rare in data processes. Before putting such checks in place, one of our clients was forced to retract an entire presentation built on a forecast with an out-of-date set of assumptions, embarrassing the team and the company while costing them future work.
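A quality check for this kind of mistake does not need to be elaborate. As a rough sketch, assuming each forecast assumption is stored with the date it was last reviewed (the field names, sample values and 90-day limit are all hypothetical), a short function can list missing or outdated assumptions before anything is presented:

```python
from datetime import date

def check_assumptions(assumptions, today, max_age_days=90):
    """Return a list of human-readable problems; an empty list means the checks passed."""
    problems = []
    for name, entry in assumptions.items():
        if entry.get("value") is None:
            problems.append(f"{name}: missing value")
        age = (today - entry["as_of"]).days
        if age > max_age_days:
            problems.append(f"{name}: {age} days old (limit {max_age_days})")
    return problems

# Hypothetical assumption set behind a forecast.
assumptions = {
    "gas_price": {"value": 3.10, "as_of": date(2024, 5, 1)},
    "demand_growth": {"value": 0.02, "as_of": date(2023, 6, 1)},
}

problems = check_assumptions(assumptions, today=date(2024, 6, 1))
print(problems)  # ['demand_growth: 366 days old (limit 90)']
```

Running a check like this as the last step before publishing turns an out-of-date input into a short list to fix rather than a presentation to withdraw.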

6. OPACITY

Any data process that does not have documentation and transparency can result in disaster for any organization. The trigger for these catastrophes can be changes in personnel (the point person leaves), infrequent updates (the point person forgets how to do it) or simply changes in requirements (the point person needs to change his or her process).

Having seen countless companies pay the price for opacity, we believe that creating a culture that supports a transparent set of data process documentation is critical to maintaining the implicit value and going concern of your business unit or organization. This issue is also discussed in our Top Five Business Modeling Pitfalls blog post.

While no single framework can fully capture all the challenges associated with data efficiency, understanding these six factors will help organizations develop more productive team members and higher quality results. Over the coming weeks, we will publish a post for each of these six factors that provides detailed examples and suggestions on how to reduce its cost and impact.

Really interesting article and the role I.T play could solve many of the issues you highlight. If you build a successful Data Exploration model and give the Analysts the right tool to have total freedom then many of the issues would disappear.

Hi Andy - I think you hit the nail on the head. IT can solve it, but daily intervention must be rare to avoid creating yet another layer of process.

ReplyDeleteRetrieving data from tape storage often isn't as fast as with disk storage, but that isn't too large of concern in terms of Sarbanes Oxley compliance. Self Storage

ReplyDeleteI am extremely baffled by observing the entire bundle of information and I got the undertaking structure my boos to compress the huge information into the short frame and present this information to other colleagues so its huge issue for me. Then my companion enlightened me regarding this https://activewizards.com/ for getting the information researcher who can without much of a stretch short my information and change over into graphical frame effectively and subsequent to reaching them my issues is illuminated.

ReplyDeleteOne can go to a boundless number of educator drove online sessions from various coaches for 1 year at no extra expense. data science course in pune

ReplyDeleteI finally found great post here.I will get back here. I just added your blog to my bookmark sites. thanks.Quality posts is the crucial to invite the visitors to visit the web page, that's what this web page is providing.

ReplyDeleteData science course in mumbai

Such a very useful article. Very interesting to read this article. I have learn some new information.thanks for sharing. ExcelR

ReplyDeleteVery awesome!!! When I seek for this I found this website at the top of all blogs in search engine.

ReplyDeleteExcelR Data Analytics courses

Such a very useful article. Very interesting to read this article.I would like to thank you for the efforts you had made for writing this awesome article.

ReplyDeleteExcelR data analytics courses

Interesting post. I Have Been wondering about this issue, so thanks for posting. Pretty cool post.It 's really very nice and Useful post.I am interested in some of them.I hope you will give more information on this topics in your next articles.

ReplyDeleteData Science training

data analytics course

business analytic course

Attend The Machine Learning courses in Bangalore From ExcelR. Practical Machine Learning courses in Bangalore Sessions With Assured Placement Support From Experienced Faculty. ExcelR Offers The Machine Learning courses in Bangalore.

ReplyDeleteExcelR Machine Learning courses in Bangalore

We are located at :

Location 1:

ExcelR - Data Science, Data Analytics Course Training in Bangalore

49, 1st Cross, 27th Main BTM Layout stage 1 Behind Tata Motors Bengaluru, Karnataka 560068

Phone: 096321 56744

Hours: Sunday - Saturday 7AM - 11PM

Location 2:

ExcelR

#49, Ground Floor, 27th Main, Near IQRA International School, opposite to WIF Hospital, 1st Stage, BTM Layout, Bengaluru, Karnataka 560068

Phone: 070224 51093

Hours: Sunday - Saturday 7AM - 10PM

Great post i must say and thanks for the information. Education is definitely a sticky subject. However, is still among the leading topics of our time. I appreciate your post and look forward to more.

ReplyDeletemachine learning course

artificial intelligence course in mumbai

Really awesome blog!!! I really enjoyed reading this article. Thanks for sharing valuable information.

ReplyDeleteData Science Course in Marathahalli

Data Science Course Training in Bangalore

It is perfect time to make some plans for the future and it is time to be happy. I've read this post and if I could I desire to suggest you some interesting things or suggestions. Perhaps you could write next articles referring to this article. I want to read more things about it!

ReplyDeletedata science course

360DigiTMG

The information provided on the site is informative. Looking forward more such blogs. Thanks for sharing .

ReplyDeleteArtificial Inteligence course in Faridabad

AI Course in Faridabad

I just stumbled upon your blog and wanted to say that I have really enjoyed reading your blog posts. ExcelR Data Scientist course In Pune Any way I’ll be subscribing to your feed and I hope you post again soon. Big thanks for the useful info.

ReplyDeleteThis comment has been removed by the author.

ReplyDeleteThis comment has been removed by the author.

ReplyDeleteGreat post i must say and thanks for the information.

ReplyDeleteData Science Course in Hyderabad

I have express a few of the articles on your website now, and I really like your style of blogging. I added it to my favorite’s blog site list and will be checking back soon…

ReplyDeleteData Science Classes in Pune Super site! I am Loving it!! Will return once more, Im taking your food likewise, Thanks.

Really nice and interesting post. I was looking for this kind of information and enjoyed reading this one. Keep posting. Thanks for sharing.

ReplyDeleteartificial intelligence course in bangalore

Great post I would like to thank you for the efforts you have made in writing this interesting and knowledgeable article.

ReplyDeleteData Science Course in Bangalore

https://lybeautifu.blogspot.com/2018/04/milan-court-of-u11-of-first-instance.html?showComment=1595872612608#c7817618142395763973

ReplyDeleteBest Data Science Courses in Bangalore

This is such a great resource that you are providing and you give it away for free.

ReplyDeleteData Science Course in Bangalore

I really appreciate this wonderful post that you have provided for us. I assure this would be beneficial for most of the people.

ReplyDeleteData Science Training in Bangalore

Really nice and interesting post. I was looking for this kind of information and enjoyed reading this one. Keep posting. Thanks for sharing

ReplyDeletebest data science courses in mumbai

ReplyDeleteReally impressed! Everything is very open and very clear clarification of issues. It contains truly facts. Your website is very valuable. Thanks for sharing.

cyber security course training in guduvanchery

IT Company

ReplyDeleteIT Company

IT Company

IT Company

IT Company

IT Company

IT Company

IT Company

very well explained. I would like to thank you for the efforts you had made for writing this awesome article. This article inspired me to read more. keep it up.

ReplyDeleteLogistic Regression explained

Correlation vs Covariance

Simple Linear Regression

data science interview questions

KNN Algorithm

wonderful article. I would like to thank you for the efforts you had made for writing this awesome article. This article resolved my all queries. data science courses

ReplyDeleteFascinating post. I Have Been considering about this issue, so thankful for posting. Totally cool post.It 's very generally very Useful post.Thanks

ReplyDeletedigital marketing course

Hello there, I sign on to your new stuff like each week. Your humoristic style is clever, keep it up

ReplyDeletemaster in data science malaysia

Incredibly conventional blog and articles. I am realy very happy to visit your blog. Directly I am found which I truly need. Thankful to you and keeping it together for your new post.

ReplyDelete360DigiTMG digital marketing courses

Incredibly conventional blog and articles. I am realy very happy to visit your blog. Directly I am found which I truly need. Thankful to you and keeping it together for your new post.

ReplyDelete360DigiTMG data scientist certification

Thanks for sharing amazing blog. I am really happy to read your blog. Thanks for sharing your blog.

ReplyDeleteAndroid Training in Tambaram

Android Training in Anna Nagar

Android Training in Velachery

Android Training in T Nagar

Android Training in Porur

Android Training in OMR

It is perfect chance to make a couple of game plans for the future and the opportunity has arrived to be sprightly. I've scrutinized this post and if I may I have the option to need to suggest you some interesting things or recommendations. Perhaps you could create next articles insinuating this article. I have to examine more things about it!

ReplyDeletehttps://360digitmg.com/course/certification-program-in-data-science

Great information. The above content is very interesting to read. This will be loved by all age groups.

ReplyDeletelist to string python

data structures in python

polymorphism in python

python numpy tutorial

python interview questions and answers

convert list to string python

I feel appreciative that I read this. It is useful and extremely educational and I truly took in a ton from it.

ReplyDeletedata scientist training

Stunning! Such an astonishing and supportive post this is. I incredibly love it. It's so acceptable thus wonderful. I am simply astounded.

ReplyDeletehttps://360digitmg.com/course/certification-program-in-data-science

Stunning! Such an astonishing and supportive post this is. I incredibly love it. It's so acceptable thus wonderful. I am simply astounded.

ReplyDeletehttps://360digitmg.com/course/certification-program-on-digital-marketing

I think I have never watched such online diaries ever that has absolute things with all nuances which I need. So thoughtfully update this ever for us.

ReplyDeletehttps://360digitmg.com/course/data-analytics-using-python-r

Nice content and interesting blog. Join 360digitmg for the data scientist course.

ReplyDeletehttps://360digitmg.com/data-science-course-training-in-hyderabad

I feel really happy to have seen your webpage and look forward to so many more entertaining times reading here. Thanks once more for all the details.

ReplyDeleteData Science Training in Hyderabad

It is perfect time to make some plans for the future and it is time to be happy. I've read this post and if I could I desire to suggest you some interesting things or suggestions. Perhaps you could write next articles referring to this article. I want to read more things about it!

ReplyDeleteData Science Course in Pune

ReplyDeleteI am glad that i found this page ,Thank you for the wonderful and useful posts enjoyed reading it ,i would like to visit again.

Data Science Course in Mumbai

Truly, this article is really one of the very best in the history of articles. I am a antique ’Article’ collector and I sometimes read some new articles if I find them interesting. And I found this one pretty fascinating and it should go into my collection. Very good work!

ReplyDeleteData Science Course in Pune

What a really awesome post this is. Truly, one of the best posts I've ever witnessed to see in my whole life. Wow, just keep it up.

ReplyDeleteData Science Course in Pune

Your content is very unique and understandable useful for the readers keep update more article like this.

ReplyDeletedata scientist course

I am a new user of this site, so here I saw several articles and posts published on this site, I am more interested in some of them, hope you will provide more information on these topics in your next articles.

ReplyDeleteData Science Classes in Pune

It is late to find this act. At least one should be familiar with the fact that such events exist. I agree with your blog and will come back to inspect it further in the future, so keep your performance going.

ReplyDeleteData Science In Bangalore

Going to graduate school was a positive decision for me. I enjoyed the coursework, the presentations, the fellow students, and the professors. And since my company reimbursed 100% of the tuition, the only cost that I had to pay on my own was for books and supplies. Otherwise, I received a free master’s degree. All that I had to invest was my time.

ReplyDeletedata scientist course

very informative blog

ReplyDeleteData Scientist Course

Mua vé tại đại lý vé máy bay Aivivu, tham khảo

ReplyDeleteVé máy bay đi Mỹ

mua vé máy bay từ mỹ về việt nam

giá vé máy bay pacific airlines hải phòng sài gòn

chuyến bay từ hcm ra hà nội

lịch bay từ vinh đi nha trang

Thanks for your nice post I really like it and appreciate it. My work is about Custom Vape Cartridge Boxes. If you need perfect quality boxes then you can visit our website.

ReplyDeletewm casino

ReplyDeleterespect55

เรียนภาษาอังกฤษ

poker

xxx

xxx

xxx ไทย

Nice Blog,

ReplyDeleteDigital Marketing Training in KPHB at Digital Brolly

Very good message. I stumbled across your blog and wanted to say that I really enjoyed reading your articles. Anyway, I will subscribe to your feed and hope you post again soon.

ReplyDeleteData Science Training in Pune

With so many books and articles appearing to usher in the field of making money online and further confusing the reader on the real way to make money.

ReplyDeleteData Science Certification in Bangalore

Bespoke packaging boxes At Bespoke Packaging UK we strongly believe in the interests of bespoke packaging, which has multiple benefits

ReplyDeleteIt's good to visit your blog again, it's been months for me. Well, this article that I have been waiting for so long. I will need this post to complete my college homework, and it has the exact same topic with your article. Thanks, have a good game.

ReplyDeleteData Science Course in Pune

Impressive. Your story always bring hope and new energy. Keep up the good work.

ReplyDeletebest data science institute in hyderabad

VISTATOTO

ReplyDeleteVISTATOTO

VISTATOTO

VISTATOTO

VISTATOTO

VISTATOTO

The AWS certification course has become the need of the hour for freshers, IT professionals, or young entrepreneurs. AWS is one of the largest global cloud platforms that aids in hosting and managing company services on the internet. It was conceived in the year 2006 to service the clients in the best way possible by offering customized IT infrastructure. Due to its robustness, Digital Nest added AWS training in Hyderabad under the umbrella of other courses

ReplyDeletePython Training in Bangalore Offered by myTectra is the most powerful Python Training ever offered with Top Quality Trainers, Best Price, Certification, and 24/7 Customer Care.

ReplyDeletehttps://www.mytectra.com/python-training-in-bangalore.html

The content that I normally go through nowadays is not at all in parallel to what you have written. It has concurrently raised many questions that most readers have not yet considered.

ReplyDeleteData Science Training in Hyderabad

Data Science Course in Hyderabad

The content that I normally go through nowadays is not at all in parallel to what you have written. It has concurrently raised many questions that most readers have not yet considered.

ReplyDeleteData Science Training in Hyderabad

Data Science Course in Hyderabad

It is late to find this act. At least one should be familiar with the fact that such events exist. I agree with your blog and will come back to inspect it further in the future, so keep your performance going.

ReplyDeleteData Analytics Course in Bangalore

It took me a while to read all the reviews, but I really enjoyed the article. This has proven to be very helpful to me and I'm sure all the reviewers here! It's always nice to be able to not only be informed, but also have fun!

ReplyDeleteDigital Marketing Course in Bangalore

I don't have time to read your entire site right now, but I have bookmarked it and added your RSS feeds as well. I'll be back in a day or two. Thank you for this excellent site.

ReplyDeleteDigital Marketing Course in Bangalore

First You got a great blog .I will be interested in more similar topics. I see you have really very useful topics, i will be always checking your blog thanks.

ReplyDeletedigital marketing courses in hyderabad with placement

An awesome blog thanks a lot for giving me this great opportunity to write on this.

ReplyDeletecombo cách ly trọn gói 14 ngày HCM

xe taxi sân bay

xin visa Hàn Quốc

Lệ phí xin visa Nhật Bản

vé máy bay đi mỹ giá rẻ

dai ly ve may bay vietjet

Very informative post ! There is a lot of information here that can help any business get started with a successful social networking campaign !

ReplyDeletebest data science institute in hyderabad

I am reading your post from the beginning, it was so interesting to read & I feel thanks to you for posting such a good blog, keep updates regularly.I want to share about

ReplyDeletebest data science course online

Your content is very unique and understandable useful for the readers keep update more article like this.

ReplyDeletedata scientist course in pune

A great website with interesting and unique material what else would you need.

ReplyDeletedigital marketing courses in hyderabad with placement

I just found this blog and have high hopes for it to continue. Keep up the great work, its hard to find good ones. I have added to my favorites. Thank You.

ReplyDeletedata scientist course

Thanks for sharing nice information....

ReplyDeletedata science training in pune

Good Post! , it was so good to read and useful to improve my knowledge as an updated one, keep blogging.

ReplyDeletevé máy bay từ mỹ về việt nam giá rẻ

lịch bay từ úc về việt nam hôm nay

gia ve may bay tu han quoc ve viet nam

Tra ve may bay gia re tu Nhat Ban ve Viet Nam

mua ve may bay gia re tu Dai Loan ve Viet Nam

chuyến bay giải cứu Canada 2021

Wow, amazing post! Really engaging, thank you.

ReplyDeletebest machine learning course in aurangabad

Thanks for Sharing This Article.It is very so much valuable content.

ReplyDeletegiá vé máy bay từ california về việt nam

lich bay tu duc ve viet nam

chuyến bay cuối cùng từ anh về việt nam

vé máy bay từ úc về việt nam

Ve may bay Bamboo tu Dai Loan ve Viet Nam

thông tin chuyến bay từ canada về việt nam

Impressive. Your story always brings hope and new energy. Keep up the good work.

ReplyDeletebest data science institute in hyderabad

I would also motivate just about every person to save this web page for any favorite assistance to assist posted the appearance.

ReplyDeletedata scientist training and placement

Ad tech jobs Wow, cool post. I'd like to write like this too - taking time and real hard work to make a great article... but I put things off too much and never seem to get started. Thanks though.

ReplyDeleteI enjoyed the coursework, the presentations, the classmates and the teachers. And because my company reimbursed 100% of the tuition, the only cost I had to pay on my own was for books and supplies. Otherwise, I received a free master's degree. All I had to invest was my time.

ReplyDeleteBest Data Science Courses in Bangalore

I enjoyed the coursework, the presentations, the classmates and the teachers. And because my company reimbursed 100% of the tuition, the only cost I had to pay on my own was for books and supplies. Otherwise, I received a free master's degree. All I had to invest was my time.

ReplyDeleteDigital Marketing Course in Bangalore

Are you seeking the simplest SEO COMPANY DELHI? We help brands to optimize their online marketing performance. We promote your brands by increasing their visibility on social media to all or any over the world. Our aim is to produce a better quality performance for our client. Our dedicated team includes expert members having advanced skills.

ReplyDeleteI am looking for and I love to post a comment that "The content of your post is awesome" Great work!

ReplyDeletedata scientist training in malaysia

I’m excited to uncover this page. I need to to thank you for ones time for this particularly fantastic read !! I definitely really liked every part of it and i also have you saved to fav to look at new information in your site.giá vé máy bay từ california về việt nam

ReplyDeleteĐặt vé online từ đài loan về việt nam rẻ nhất

vé máy bay từ đức về sài gòn

vé máy bay từ san francisco về việt nam

thông tin chuyến bay từ canada về việt nam

chuyến bay từ anh về việt nam

Nice Blog.

ReplyDeleteClinical Research and Pharmacovigilance Course Certification Training Institute In Hyderabad

Gratisol labs provides Clinical Research course and it is a leading Clinical Research training Institute providing Clinical Research Certification Coures . This Clinical Research Certification Course is covered with 4 Modules of Certification. Pharmacovigilance Certification Training, Clinical Data Management Certification Training,Regulatory Affairs Course and Medical Writing course and SAS Certification Training.

link text

link text

link text

link text

link text

link text

I see some amazingly important and kept up to length of your strength searching for in your on the site

ReplyDeletedata science course in malaysia

You have written a lovely article; the importance of education is now spreading fire between people, especially in underdeveloped countries. Get an Online Class Software to simplify education.

ReplyDeleteFirst and foremost, our agents use common sense and they have good judgment. Their senses are fine-tuned bodyguard company

ReplyDeleteto help them spot dangerous situations and make correct decisions quickly before anyone is harmed.




I think this article is a valuable reminder of the common inefficiencies in data analysis processes and how they can be addressed. Identifying and tackling issues such as conveyance, inventory, waiting, over-processing, errors, and opacity is crucial for improving data utilization and efficiency. Looking forward to the upcoming posts with solutions!


Data analysts may sometimes appear inefficient due to various challenges they face, such as dealing with large and complex datasets, the need for data cleaning and preprocessing, and navigating through ever-evolving tools and technologies. However, their role is essential in extracting valuable insights from data. Organizations can support analysts by providing adequate resources, training, and streamlined processes to improve efficiency and the overall impact of data analysis efforts.


This insightful article highlights the common inefficiencies in data processes and offers practical solutions to enhance productivity. A valuable resource for organizations striving to optimize their data utilization.


This smart article identifies typical data processing inefficiencies and provides workable methods to boost efficiency. A useful tool for firms looking to maximise the use of their data.

Your post raises important questions about the challenges faced by data analysts in their daily work. It's a topic that resonates with many professionals in the field of data analytics. Thanks for sharing.


Great! The blog post effectively highlights the challenges faced by data analysts and the factors contributing to their inefficiency. Thanks for sharing valuable insights.


Diverse data sources and complex analytical tasks contribute to the challenges analysts face. Investing in continuous learning, fostering a collaborative environment, and embracing innovative tools are key steps toward improving efficiency in data analysis.


By diving into the nuances of data analysis workflows, the ever-evolving technological landscape, and the demands for precision and accuracy, we'll debunk the notion of inherent inefficiency. Instead, we'll highlight the dedication, expertise, and meticulousness that data analysts bring to the table.


Interesting insights! It's crucial to address inefficiencies in data analysis by streamlining workflows and adopting better tools for productivity and accuracy.


This topic on the inefficiencies of data analysts is quite thought-provoking! It often seems that analysts spend a significant amount of time on data cleaning, preparation, and manual reporting rather than focusing on actual analysis and insights. Factors like inadequate tools, lack of automation, and siloed data can contribute to these inefficiencies. I believe investing in better data management tools and fostering a culture of collaboration can greatly enhance productivity. It would be interesting to explore specific strategies or tools that can help analysts streamline their workflows!


Insightful post! Identifying these time wasters in data processing is crucial for improving efficiency. I’m looking forward to the next part! Thanks for sharing your expertise


This article offers a thorough analysis of the inefficiencies that plague data analysts, presenting a compelling case for why many organizations struggle to use their data effectively. The parallels drawn to lean manufacturing principles are particularly insightful, as they frame the discussion around the common sources of waste.

The six identified time wasters (conveyance, inventory, waiting, over-processing, correction, and opacity) are articulated clearly and backed by real-world examples that illustrate their impact on productivity. I appreciate the focus on how these inefficiencies not only consume resources but also hinder the potential for valuable insights from data. The specific examples, such as the issues caused by moving data between spreadsheets, highlight the fragility of these processes and the need for a more robust approach.

Moreover, the article emphasizes the importance of transparency and proper documentation in data processes, a point that often gets overlooked in fast-paced work environments. I’m eager to see the upcoming posts that will delve deeper into each factor, providing actionable solutions for organizations looking to improve their data efficiency.

Overall, this article is a valuable resource for data professionals and decision-makers alike. It serves as a reminder that optimizing data processes can lead to more productive teams and better outcomes, ultimately giving companies a competitive edge in the market. Great insights!


Data analysts may seem inefficient due to time spent on cleaning and organizing messy datasets, addressing shifting goals, or handling repetitive tasks. Streamlining workflows with automation and clear objectives can significantly improve their productivity.


Great post! Identifying time wasters in data processes is crucial for improving efficiency. Your insights are very practical and helpful for anyone looking to streamline their workflow. Looking forward to the next part of this series!


This article is an excellent analysis of the hidden inefficiencies in data processes, bridging the principles of lean manufacturing with modern data management.

Excellent post on time wasters in data processing! It’s so easy to overlook inefficiencies that slow down the entire workflow, and your tips on how to identify and eliminate them are incredibly useful. I look forward to part two of this series. Keep it up!

Data inefficiency is a major issue, and understanding the six biggest time wasters, such as over-processing and waiting, can significantly improve workflow. Streamlining data processes with clear documentation and better tools will empower teams to deliver higher-quality results.


This blog post effectively highlights key inefficiencies in data processes by drawing parallels to lean manufacturing principles, making it relatable and impactful. The breakdown of the "Six Biggest Data Time Wasters" is clear, actionable, and highly relevant for organizations looking to optimize their data operations.


I can definitely relate to some of the time wasters you mentioned! Your insights are spot on, and I appreciate the practical examples that illustrate each point.

The connection between data inefficiencies and Taiichi Ohno’s lean manufacturing principles could be made smoother. A brief explanation of how these principles apply to data management would help.


Companies invest heavily in data, yet inefficiencies still waste resources. Inspired by lean manufacturing, six major data time wasters have been identified. This series will explore them and provide solutions for better efficiency.


Great breakdown of the hidden inefficiencies in data workflows! The parallels with lean manufacturing make the issues really clear, especially the “opacity” and “waiting” parts, which I see all the time. Looking forward to the deep dives on each waste factor.


Thanks for this eye-opening breakdown of data inefficiencies — the parallels with lean manufacturing really drive the point home. Your insights on conveyance and opacity especially resonated. Out of curiosity, how would you recommend smaller teams start implementing transparency in their data workflows without overwhelming their limited resources?


Data analysts may appear inefficient due to fragmented data sources, unclear business objectives, or reliance on outdated tools. Efficiency improves with better data pipelines, defined KPIs, and modern analytics platforms. Bridging communication gaps between analysts and stakeholders is key to unlocking their full potential and driving timely, actionable insights.


It's crucial to look at the environment and the processes in place. Often, empowering data analysts with the right resources, clear objectives, and efficient workflows can significantly boost their productivity and impact. Painting with a broad brush of "inefficiency" risks overlooking the systemic challenges they might be facing.


Great post! I really appreciate how you highlighted common data process time-wasters that often go unnoticed. Identifying these bottlenecks is the first step toward more efficient workflows, and your practical advice is super helpful for anyone working with data. Looking forward to Part Two!


This is a fantastic and much-needed framework; I really appreciate how you’ve adapted lean manufacturing principles to the world of data. Looking forward to the deeper dives on each category. Great work making this both practical and strategic!